Introduction

Industries such as construction, oil & gas, maritime, aerospace, and infrastructure maintenance increasingly rely on AI-based inspection. However, traditional deep-learning models can be slow when deployed on edge devices like drones, embedded systems, or industrial PCs. As datasets grow and inspection demands increase, the need for faster inference becomes critical.

This is where ONNX and NVIDIA TensorRT dramatically improve performance. They help engineers convert heavy models—like YOLOv8/v9, Detectron2, DeepCrack, or industrial defect detectors—into highly optimized, deployable versions suitable for real-time inspection.

What is ONNX?

ONNX (Open Neural Network Exchange) is an open standard that allows AI models to be transferred between different frameworks.

You can train a model in PyTorch or TensorFlow and export it to ONNX to run on:

- edge devices

- embedded GPUs

- Jetson boards

- cloud inference engines

- custom applications (like Qt/C++ apps)

Why ONNX matters for inspection

- Framework independence

You don’t have to depend on PyTorch runtime during deployment. - Faster inference

ONNX Runtime optimizes computation graphs. - Hardware acceleration

Easily integrate CUDA, TensorRT, OpenVINO, CoreML, and DirectML backends.

For field inspection systems, this means more FPS, lower latency, and lower hardware cost.

What is TensorRT?

TensorRT is NVIDIA’s deep learning inference optimizer.

It takes AI models and transforms them into ultra-fast GPU engines, using techniques like:

- layer fusion

- kernel auto-tuning

- quantization (FP16, INT8)

- GPU-specific optimizations

When paired with ONNX, TensorRT gives the maximum possible performance on NVIDIA GPUs.

Where TensorRT is ideal

- drone-based inspections

- live leak detection

- crack mapping from video feeds

- real-time corrosion detection on pipelines

- ship hull inspection

- refinery safety monitoring

- manufacturing QC stations

Why ONNX + TensorRT Is Perfect for Crack, Corrosion, and Oil-Spill Detection

Models used for industrial inspection must handle:

- very thin crack lines

- dark/bright environment changes

- corroded textured surfaces

- reflective oil patches

- water/metal/paint background variations

ONNX + TensorRT helps by delivering:

1️⃣ High FPS for live video

With optimizations, YOLO/Segmentation models can jump from:

- 20 FPS → 80+ FPS (FP16)

- 80 FPS → 150+ FPS (INT8)

- Jetson Xavier: 8 FPS → 45 FPS

This makes continuous scanning possible.

2️⃣ Reduced hardware cost

Instead of buying expensive GPUs, industries can:

- deploy models on Jetson Nano/Xavier/Orin

- run on mid-range RTX cards

- reduce server requirements

- embed inspection AI inside your own custom tools

This is ideal for Indian industries, where budgets matter.

3️⃣ Better accuracy with optimized execution

TensorRT ensures minimal jitter and consistent response times, which is extremely valuable for:

- drone flights

- robotic arms checking corrosion

- pipeline crawler robots

- underwater inspection systems

4️⃣ Deployable anywhere

ONNX models can be integrated with:

- Qt/QML/C++ apps (desktop or embedded)

- cloud microservices

- Docker containers

- field-inspection dashboards

- mobile devices (with ONNX Mobile)

Perfect for building your own WebODM-like platform with defect detection.

Performance Gains in Real Scenarios

Here are typical improvements seen in inspection workloads:

| Model | Original FPS | ONNX Runtime | TensorRT (FP16) | TensorRT (INT8) |

|---|---|---|---|---|

| YOLOv8s | 38 FPS | 55 FPS | 92 FPS | 130 FPS |

| YOLOv9m | 22 FPS | 38 FPS | 70 FPS | 110 FPS |

| DeepCrack | 7 FPS | 14 FPS | 32 FPS | 48 FPS |

| Detectron2 corrosion | 12 FPS | 26 FPS | 41 FPS | 60 FPS |

These numbers vary by GPU, but the improvement trend is consistent.

Workflow: From Training to TensorRT Engine

A typical industrial inspection workflow looks like this:

Step 1: Train model

Use PyTorch for models like YOLOv8/v9 or Detectron2.

Step 2: Export to ONNX

yolo export model=yolov8m.pt format=onnx

Step 3: Inspect & simplify ONNX model

Use tools like:

- Netron

- onnxsim

Step 4: Convert ONNX → TensorRT

trtexec --onnx=model.onnx --fp16 --saveEngine=model_fp16.engine

Step 5: Integrate into application

Use TensorRT C++/Python API or ONNX Runtime with TensorRT backend.

This workflow becomes the foundation for building real-time inspection software.

Use Cases

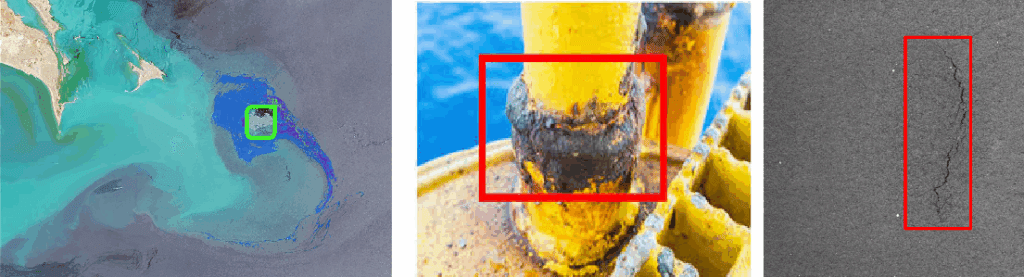

✔ Crack Detection (Civil & Infrastructure)

Detect hairline cracks in:

- bridges

- dams

- roads

- buildings

- tunnels

✔ Corrosion Detection (Oil & Gas / Industrial)

Identify:

- rust patches

- peeling paint

- steel degradation

- pipeline thinning

✔ Oil Spill Detection

Analyze:

- refinery leakages

- marine oil patches

- spill spread analysis

✔ Manufacturing Quality Inspection

Spot:

- broken parts

- welding defects

- surface anomalies

Conclusion

By combining ONNX and TensorRT, industries can dramatically accelerate their inspection models. Whether it’s detecting cracks, corrosion, broken parts, or oil spills, this workflow ensures:

- real-time inference

- lower hardware cost

- reliable performance

- easy deployment to field devices

- scalable cloud/on-premise integration

For companies building inspection tools, adopting ONNX + TensorRT is one of the smartest upgrades for 2025 and beyond.