Introduction

In today’s AI-driven world, most intelligent assistants rely on cloud connectivity. But what if you could run a ChatGPT-style assistant completely offline?

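With tools like Ollama, you can now run large language models (LLMs) locally on your own system without depending on the internet. Because prompts and data never leave your machine, this approach offers data privacy, responses without network round trips (actual speed depends on your hardware), and complete control over your AI environment.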

At Manya Technologies, we leverage this capability to build custom offline AI assistants tailored for enterprise, defense, and development use cases — ensuring high performance, security, and adaptability.

What Is Ollama?

Ollama is a lightweight platform that lets you run open-source language models (like Llama 3, Mistral, or Code Llama) locally on Windows, macOS, or Linux.

It handles model downloads, quantization, and execution efficiently, and it exposes a local API that other applications can call. Once a model has been downloaded, you can use ChatGPT-like capabilities without any cloud dependency.

Use Cases for Offline ChatGPT

Offline AI systems are especially useful where internet connectivity is restricted or data sensitivity is high.

Some common applications include:

- 🧠 Programming & Development Assistants in secure labs

- 🛰️ Defense or Aerospace systems requiring complete isolation

- 📑 Document summarization and parsing without external uploads

- 🗣️ Voice-based command and control for tactical systems

- 💼 Enterprise automation with on-premise AI infrastructure

Steps to Set Up an Offline ChatGPT Using Ollama

You can easily create your own offline assistant in a few steps:

1. Install Ollama

Visit https://ollama.ai and download the installer for your OS. Windows and macOS use a regular installer; on Linux, the site provides a one-line install script instead.
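After installation, the Ollama server normally runs in the background; if it does not, `ollama serve` starts it manually. A minimal Python sketch (standard library only) for checking that the server is reachable before going further. It assumes the default address http://localhost:11434 and relies on the server answering a plain GET on its root URL with a short status message ("Ollama is running" at the time of writing). The function name `is_ollama_running` is our own, not part of Ollama.

```python
import urllib.error
import urllib.request

# Assumption: Ollama's server listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an Ollama server answers at base_url, False otherwise."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            # The server replies to GET / with a short plain-text status line.
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and "Ollama" in body
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or HTTP error: treat as "not running".
        return False
```

Calling `is_ollama_running()` right after installation should return `True`; `False` usually means the background service has not started yet.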

2. Pull an AI Model

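Once Ollama is installed, open a terminal and pull a model. This download is the only step that needs an internet connection; afterwards, the model runs fully offline. For example: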

ollama pull llama3

or a code-focused model:

ollama pull codellama
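`ollama list` shows which models are already installed. Programs can get the same information from the local server's `GET /api/tags` endpoint, whose JSON reply contains a `models` array with a `name` field per model (for example `llama3:latest`; names pulled without a tag get the `latest` tag). The Python sketch below assumes the default server address; `parse_model_names`, `list_local_models`, and `has_model` are hypothetical helpers, with parsing kept separate from the network call so it can be tested without a running server.

```python
import json
import urllib.request

# Assumption: Ollama's server listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [m["name"] for m in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local server which models have already been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


def has_model(installed: list[str], wanted: str) -> bool:
    """True if `wanted` (e.g. "llama3") matches an installed name such as "llama3:latest"."""
    full_name = wanted if ":" in wanted else f"{wanted}:latest"
    return full_name in installed
```

A setup script could call `has_model(list_local_models(), "llama3")` and prompt the user to run `ollama pull llama3` while a network connection is still available.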

3. Run the Model Locally

Launch the model with:

ollama run llama3

You’ll now have a local ChatGPT-like prompt running entirely on your system.

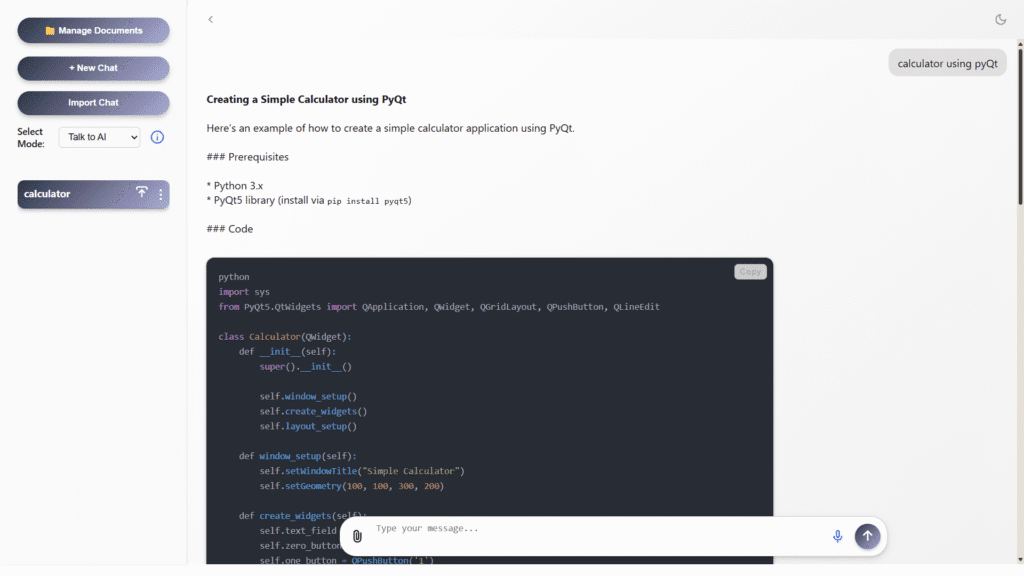
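`ollama run` is the interactive front end; the same model can also be queried from code through the server's `POST /api/generate` endpoint. The Python sketch below assumes the default address and sets `"stream": False`, in which case the server returns a single JSON object whose `response` field holds the complete answer (by default the endpoint streams partial results instead). The function names are illustrative.

```python
import json
import urllib.request

# Assumption: Ollama's server listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL,
             timeout: float = 300) -> str:
    """Send one prompt to a locally running model and return its full reply."""
    req = build_generate_request(model, prompt, base_url)
    # Generous timeout: large models on modest hardware can take a while.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # With "stream": False the server sends one JSON object;
        # its "response" field contains the generated text.
        return json.loads(resp.read())["response"]
```

For example, `print(generate("llama3", "Explain what a mutex is in one sentence."))` sends a single prompt and prints the reply once generation finishes.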

4. Integrate with a Custom GUI

Ollama also runs a local HTTP server (by default at http://localhost:11434), so developers can connect custom interfaces built with Qt, Electron, or React to it and build offline AI tools with voice, code, and document capabilities.
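Whatever the front end, the integration layer comes down to HTTP calls against the local server. A minimal Python sketch of that layer, assuming the default address and Ollama's `POST /api/chat` endpoint: the endpoint is stateless, so the client resends the whole message history on each turn, and with streaming enabled the reply arrives as one JSON object per line, each carrying a fragment in `message.content`, until an object with `"done": true`. `ChatSession`, `iter_chat_chunks`, and the `on_token` callback are hypothetical names.

```python
import json
import urllib.request
from typing import Callable, Iterable, Iterator

# Assumption: Ollama's server listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def iter_chat_chunks(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from a streamed /api/chat reply (one JSON object per line)."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # tolerate blank lines
        chunk = json.loads(raw)
        content = chunk.get("message", {}).get("content", "")
        if content:
            yield content
        if chunk.get("done"):
            break  # the final object marks the end of the reply


class ChatSession:
    """Hypothetical client that keeps the history /api/chat needs on every turn."""

    def __init__(self, model: str, base_url: str = OLLAMA_URL) -> None:
        self.model = model
        self.base_url = base_url
        self.messages: list[dict] = []

    def send(self, user_text: str,
             on_token: Callable[[str], None] = lambda _text: None) -> str:
        """Send one user turn, stream the reply through on_token, return the full reply."""
        self.messages.append({"role": "user", "content": user_text})
        payload = {"model": self.model, "messages": self.messages, "stream": True}
        req = urllib.request.Request(
            f"{self.base_url}/api/chat",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        pieces = []
        with urllib.request.urlopen(req) as resp:
            # Iterating the response yields it line by line (newline-delimited JSON).
            for piece in iter_chat_chunks(resp):
                pieces.append(piece)
                on_token(piece)  # e.g. append to a GUI text widget as it arrives
        reply = "".join(pieces)
        # Store the assistant turn so the next request carries the full context.
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Usage: `ChatSession("codellama").send("Write a C function that reverses a string.", on_token=lambda t: print(t, end="", flush=True))`. Keeping the stream parser separate from the network code makes it testable offline, and the callback keeps the class independent of any GUI toolkit. In a real Qt or Electron app, this call should run off the UI thread so the interface stays responsive.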

Custom Offline AI Assistant by Manya Technologies

At Manya Technologies, we’ve developed a specialized Offline Programming Assistant built on Ollama and open-source AI frameworks — optimized for secure and high-performance use cases.

Key Features:

- 💻 Complete Offline Solution – Works without internet access

- 🧩 Multi-Language Support – C, C++, Python, JavaScript, Qt, .NET, and more

- 🛡️ Ideal for Defense and Restricted Environments – Zero cloud dependency

- 📄 Document Upload Support – Parse, summarize, and analyze files offline

- 🔒 Data Privacy Guaranteed – All processing remains on your machine

- ♾️ Unlimited Queries – No usage limits or subscription restrictions

- 🔁 Import/Export Conversations – Save and continue chat sessions

- 🎙️ Voice Input Integration – Supports voice-to-text and verbal commands

- ⚙️ Fully Customizable – Tailored UI, domain models, and voice options

This solution transforms your workstation into a self-contained AI companion, enhancing productivity while maintaining full data control.
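Features such as importing and exporting conversations rest on a simple property of Ollama's chat API: the server keeps no conversation state, so a session is fully described by its message list, and resuming a chat means sending that list back. As a hedged illustration (this is not Manya Technologies' actual implementation or file format), a saved conversation could be a small JSON file handled by two hypothetical helpers:

```python
import json
from pathlib import Path

# Hypothetical file format, for illustration only:
# {"model": "<name>", "messages": [{"role": "...", "content": "..."}, ...]}
VALID_ROLES = {"system", "user", "assistant", "tool"}


def export_conversation(path: Path, model: str, messages: list[dict]) -> None:
    """Save a chat history as UTF-8 JSON so it can be reopened later."""
    data = {"model": model, "messages": messages}
    path.write_text(json.dumps(data, ensure_ascii=False, indent=2), encoding="utf-8")


def import_conversation(path: Path) -> tuple[str, list[dict]]:
    """Load a saved chat; the returned messages can be resent to /api/chat to continue it."""
    data = json.loads(path.read_text(encoding="utf-8"))
    messages = data["messages"]
    for m in messages:
        # Reject entries that do not look like chat messages.
        if m.get("role") not in VALID_ROLES or not isinstance(m.get("content"), str):
            raise ValueError(f"invalid message entry: {m!r}")
    return data["model"], messages
```

Writing with `ensure_ascii=False` keeps non-English text readable in the saved file, and validating on import prevents a corrupted file from being sent to the model as context.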

Future Possibilities

Manya Technologies continues to expand its offline AI ecosystem — integrating models for:

- 🧠 Intelligent Programming Assistance

- 📚 Offline Document Parsing and Knowledge Extraction

- 🗣️ Speech Recognition and Voice Interaction

- 🗺️ GIS and Defense-specific AI Modules

Conclusion

Offline AI assistants are the future of secure, intelligent computing — enabling organizations to harness AI without compromising on privacy or compliance.

With Ollama and custom solutions from Manya Technologies, you can deploy ChatGPT-like capabilities entirely offline, tailored to your operational needs.

👉 Contact Manya Technologies today to build your custom offline AI assistant — whether for programming, document processing, or speech recognition — and experience the power of secure, localized intelligence.